Just a quick post for management pack developers who have run into problems simulating a workflow with the Visual Studio Authoring Extensions (VSAE).

I have been missing the Workflow Analyzer, the companion to the MP Simulator, for QUITE SOME TIME. I've tried troubleshooting the problem on several occasions, probably burning 10 to 12 hours of midnight oil over the past few months researching, debugging, and sifting through logs and countless Stack Overflow pages about .NET exceptions.

I've uninstalled and reinstalled the VSAE, and at some point probably Visual Studio as well, to no avail: the catastrophic behavior of Workflow Analyzer was clearly the bane of my development work. "The system cannot find the file specified"? What a benign message that is, especially when the exception doesn't specify any file at all!

I just fixed it, though, out of what seems to be sheer luck.

I uninstalled the Microsoft Monitoring Agent from my development workstation and installed the System Center 2012 SP1 agent. Lo and behold, the Workflow Analyzer sprang to life!

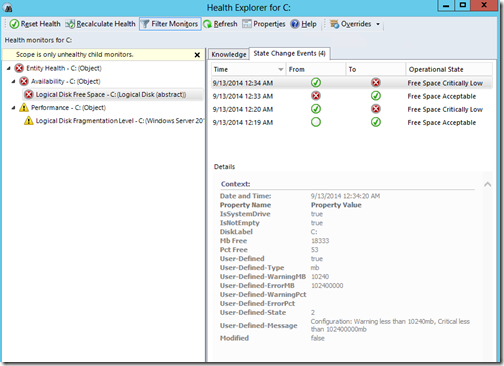

So happy now that I can actually see runtime data!

Here are a couple of other references that didn't solve the problem for me. This issue seems quite elusive, though, so they might work in your case.